What's the INCRMNTAL Measurement Methodology?

A summary of our measurement methodology and of how our model calculates the incremental value of changes in spend.

The Required Data for your Board

Our incrementality measurements rely on two data points that you provide:

- Costs for every marketing channel you're running

- KPI metrics of your choice, reported per day, country, and OS

The third data point, and the core of our measurement methodology, is the marketing activity across your various channels.

By combining authenticated connections to your ad platforms with anomaly detection, the platform annotates the changes (“activities”) made in your marketing operations: bid changes, spend changes, channels or campaigns being stopped or restarted, and more.

When onboarding your data, we typically request several months of historical data (aggregated only). This allows the platform to learn what your typical seasonality looks like and to detect past activities.
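To make "detecting activities" more concrete, here is a minimal sketch that flags spend changes in one channel's daily spend series. It is a deliberately simple stand-in for anomaly detection, not the platform's actual detector: the function name `detect_spend_changes` and the 30% day-over-day threshold are hypothetical choices for illustration.

```python
def detect_spend_changes(daily_spend, threshold=0.30):
    """Return (day_index, kind) pairs for days whose spend moves sharply.

    Hypothetical rule: a day is flagged when spend stops, restarts, or moves
    by more than `threshold` (as a fraction) relative to the previous day.
    """
    activities = []
    for i in range(1, len(daily_spend)):
        prev, cur = daily_spend[i - 1], daily_spend[i]
        if prev == 0 and cur > 0:
            activities.append((i, "restarted"))
        elif prev > 0 and cur == 0:
            activities.append((i, "stopped"))
        elif prev > 0 and abs(cur - prev) / prev > threshold:
            activities.append((i, "increase" if cur > prev else "decrease"))
    return activities


print(detect_spend_changes([100, 105, 98, 250, 250, 0, 0, 120]))
```

This returns `[(3, "increase"), (5, "stopped"), (7, "restarted")]`; the small day-to-day fluctuations on days 1 and 2 stay below the threshold and are not treated as activities.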

The Measurement Process

Whenever you measure an activity (for example, a budget increase on a channel), the platform runs a seasonality analysis on your metrics, whichever they are, and builds multiple time series from every overlapping channel, campaign, and ad group, based on the marketing activities the platform has logged.
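One simple form of seasonality analysis is a day-of-week index: the average KPI on each weekday divided by the overall average. The sketch below assumes a single daily KPI series with a purely weekly pattern; it does not reproduce the platform's own short- and long-term seasonality models, and `weekday_seasonality` is a hypothetical name.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekday_seasonality(dates, values):
    """Return {weekday: index}, where weekday 0 is Monday and an index of 1.0
    means that weekday performs exactly at the overall average."""
    overall = sum(values) / len(values)
    buckets = defaultdict(list)
    for day, value in zip(dates, values):
        buckets[day.weekday()].append(value)
    return {wd: (sum(vs) / len(vs)) / overall for wd, vs in sorted(buckets.items())}


# Four weeks starting on Monday 2024-01-01: weekdays at 100, weekends at 160.
start = date(2024, 1, 1)
dates = [start + timedelta(days=i) for i in range(28)]
values = [160.0 if d.weekday() >= 5 else 100.0 for d in dates]
index = weekday_seasonality(dates, values)
print({wd: round(v, 2) for wd, v in index.items()})
```

Here weekdays get an index of about 0.85 and weekends about 1.37, so a recurring weekend uplift can be separated from the effect of a change rather than mistaken for it.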

The platform uses the context of every activity to create a prediction that answers the question: “What would have happened if you had not made the change you're measuring?”

To answer this question, the prediction model needs to be agnostic to the context of the activity you want to measure. That is, the prediction should be the same whether you increased ad spend by $100 or by $10,000.

Each measurement uses the context of all parallel activities, including those that happened on the same day as the measured activity, while ignoring the context of the measured activity itself.
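The sketch below illustrates these two rules: the counterfactual is built only from the context of the other activities (same-day ones included), so it does not change with the size of the measured activity. The `Activity` type, `context_for`, and the linear `toy_counterfactual` are hypothetical stand-ins chosen for illustration; they are not the platform's 9-model prediction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    activity_id: str
    day: int
    channel: str
    spend_delta: float  # change in daily spend, in dollars


def context_for(measured_id, activities):
    """Every logged activity except the one being measured, same-day ones included."""
    return [a for a in activities if a.activity_id != measured_id]


def toy_counterfactual(baseline_kpi, context, lift_per_dollar=0.01):
    """Toy prediction: baseline KPI plus an assumed linear effect of the context
    activities. The measured activity never reaches this function."""
    return baseline_kpi + lift_per_dollar * sum(a.spend_delta for a in context)


# The same day, measured twice: once with a $100 increase, once with $10,000.
small = [Activity("a1", 10, "search", 100.0), Activity("a2", 10, "social", 500.0)]
large = [Activity("a1", 10, "search", 10_000.0), Activity("a2", 10, "social", 500.0)]
print(toy_counterfactual(1000.0, context_for("a1", small)))
print(toy_counterfactual(1000.0, context_for("a1", large)))
```

Both calls print the same counterfactual (1005.0), because only the same-day `social` activity contributes context; the size of the measured `search` change has no influence on the prediction.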

- The prediction is composed of 9 separate models (predictions). Some of these handle short- and long-term seasonality; the rest perform time series analysis and incorporate external “features”* into the model.

*A feature can be your own indexed data or an external data point: anything from weather to stock market tickers, Google Trends, and so on.

- The time series analysis over marketing activities uses the 45 days before the activity, and up to 14 days after it, to build the prediction.

- All 9 models are scored, and only the models that pass a set threshold are ensembled into a single model.

- The ensembled model is re-run over the entire period to build the prediction; this is our model training period.

- The validation process uses the 7 days before the change as an out-of-sample validation period.

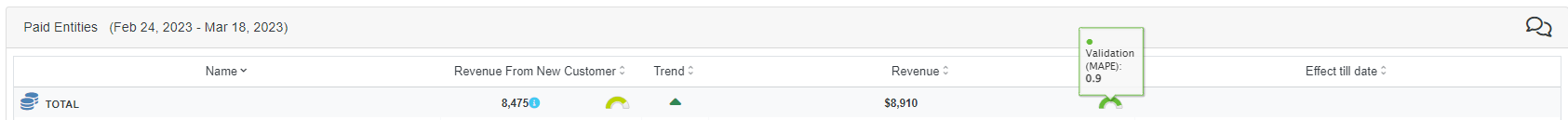
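The 45-day lookback, the 7-day validation period, and the 14-day prediction window can be laid out with plain date arithmetic. This sketch assumes the 7 validation days are the last 7 days of the 45-day lookback and that the prediction window starts on the change day itself; the function name `measurement_windows` and these boundary conventions are assumptions, not the platform's documented behavior.

```python
from datetime import date, timedelta


def measurement_windows(change_day, lookback=45, validation=7, horizon=14):
    """Return inclusive (start, end) dates for each window around a change.
    Assumption: validation is the tail of the lookback, and the prediction
    window starts on the change day itself."""
    one_day = timedelta(days=1)
    lookback_start = change_day - timedelta(days=lookback)
    validation_start = change_day - timedelta(days=validation)
    return {
        "fit": (lookback_start, validation_start - one_day),
        "validation": (validation_start, change_day - one_day),
        "prediction": (change_day, change_day + timedelta(days=horizon - 1)),
    }


for name, (start, end) in measurement_windows(date(2024, 3, 1)).items():
    print(f"{name:>10}: {start} to {end} ({(end - start).days + 1} days)")
```

For a change on 2024-03-01, this prints a fit window of 2024-01-16 to 2024-02-22 (38 days), a validation window of 2024-02-23 to 2024-02-29 (7 days), and a prediction window of 2024-03-01 to 2024-03-14 (14 days).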

- During the validation period, we calculate the MAPE (mean absolute percentage error) of each of the 9 predictions for each KPI. The MAPE is also shown in the results for your convenience.

- The complete model is then run again over the training period and builds the prediction for the 14 days after the change (this is the final prediction you see on the results page).

- The prediction is wrapped in a 95% confidence interval (by default). We attribute incremental impact to the points where the actual (factual) values fall outside the prediction's confidence interval.
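The attribution rule in this last step can be sketched as follows. The sketch assumes a symmetric normal interval with a constant standard deviation `sigma` (for example, estimated from validation residuals) and counts the full gap between actual and prediction on days outside the interval. Both choices, and the name `incremental_impact`, are assumptions for illustration; the exact interval construction is not specified here.

```python
from statistics import NormalDist


def incremental_impact(actual, predicted, sigma, confidence=0.95):
    """Per-day incremental impact: actual minus predicted on days where the
    actual falls outside the interval, 0 otherwise. Assumes a normal interval
    of constant width around each predicted point."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    impact = []
    for a, p in zip(actual, predicted):
        lower, upper = p - z * sigma, p + z * sigma
        impact.append(a - p if a > upper or a < lower else 0.0)
    return impact


predicted = [100.0, 100.0, 100.0, 100.0]
actual = [102.0, 130.0, 95.0, 60.0]
print(incremental_impact(actual, predicted, sigma=5.0))
```

With `sigma=5.0` the interval is roughly 100 ± 9.8, so only the second and fourth days count: +30 above the interval and -40 below it. Treating below-interval days as negative impact is a choice this sketch makes explicitly.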

Model Validation

Both the training period and the MAPE are presented as part of the results, because we believe in providing as much transparency into the methodology as possible.
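The MAPE scoring and threshold-based ensembling described in the measurement process can be sketched in a few lines. The 20% cutoff, the equal-weight average of the surviving models, and the three-model example (the platform uses 9) are hypothetical choices; the article does not specify the actual threshold or how the passing models are weighted.

```python
def mape(actual, predicted):
    """Mean absolute percentage error in percent; days with a zero actual are skipped."""
    pairs = [(a, p) for a, p in zip(actual, predicted) if a != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when every actual value is zero")
    return 100 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def ensemble(validation_actual, validation_preds, forecast_preds, max_mape=20.0):
    """Score each model on the validation window, keep those at or under
    `max_mape`, and average their forecasts with equal weights."""
    scores = {name: mape(validation_actual, preds) for name, preds in validation_preds.items()}
    kept = [name for name, score in scores.items() if score <= max_mape]
    if not kept:
        raise ValueError("no model passed the MAPE threshold")
    horizon = len(forecast_preds[kept[0]])
    combined = [sum(forecast_preds[name][t] for name in kept) / len(kept) for t in range(horizon)]
    return scores, kept, combined


validation_actual = [100.0, 100.0, 100.0, 100.0]
validation_preds = {
    "model_a": [110.0, 90.0, 105.0, 95.0],  # MAPE 7.5%
    "model_b": [150.0, 60.0, 140.0, 70.0],  # MAPE 40%
    "model_c": [98.0, 102.0, 101.0, 99.0],  # MAPE 1.5%
}
forecast_preds = {"model_a": [120.0, 120.0], "model_b": [200.0, 200.0], "model_c": [110.0, 130.0]}
scores, kept, combined = ensemble(validation_actual, validation_preds, forecast_preds)
print(kept, combined)
```

Here `model_b` misses the validation days by 40% on average and is dropped, so the combined forecast is the mean of `model_a` and `model_c`: `[115.0, 125.0]`.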

When the platform recognizes that more than one activity happened on the same day as the activity you want to measure, it runs the prediction process for each of those activities, using the same methodology described above.

You can read more about the platform's methodology on splitting the contribution of each of the activities on the same day here.

For any questions, please contact support@incrmntal.com or open a support ticket here.